MLOps Engineer.

From prototyping models to orchestrating data, services, and infra.

Model to cloud, observability included

Streams, ROS2 & fast backends

Docker, CI/CD, infra as code

Experience

Recent work & research

- Redesigned SharedAirDFW with Earth Engine + Geemap to give UTD ownership and simplify workflows.

- Merged four microservices into one Flask + Leaflet + MQTT pipeline, cutting maintenance overhead.

- Automated Quarto/Jupyter charting to double data-viz throughput for pollution research.

- Designed ROS2 fusion module for GNSS/IMU/LiDAR to improve localization accuracy.

- Reduced pose-estimation complexity ~50% with streamlined fusion algorithms.

- Migrated GPS odometry to a hybrid pipeline, reducing position error from ~3 m to <1 m on 10-min drives.

- Showed synthetic audio can improve CLAP accuracy by ~6% in low-data settings.

- Built PyTorch eval suite (mAP on OpenMIC-2018; cosine sim on GTZAN).

- Created LLM prompt pipeline from a keyword corpus to boost dataset diversity.

- Built a FastAPI + PostgreSQL ingestion service that handles 20k+ daily requests with low latency.

- Flask + React dashboard in Docker improved reporting speed by ~40%.

- GraphQL endpoint reduced over-fetching between backend and frontend.
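The GNSS/IMU fusion and hybrid odometry work above comes down to blending a smooth but drifting motion estimate with noisy absolute GPS fixes. As a rough illustration only, here is a generic one-dimensional Kalman filter in Python. It is not the ROS2 module itself. The class name `ScalarKalman` and the noise values `q` and `r` are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ScalarKalman:
    """Hypothetical 1-D filter: odometry/IMU predicts, GPS corrects."""

    x: float  # position estimate (m)
    p: float  # variance of the estimate (m^2)
    q: float  # process noise added per prediction step (m^2), assumed value
    r: float  # GPS measurement noise variance (m^2), assumed value

    def predict(self, dx: float) -> None:
        # Dead reckoning: apply the odometry displacement; uncertainty grows.
        self.x += dx
        self.p += self.q

    def update(self, z: float) -> None:
        # GPS fix: the Kalman gain weighs prediction vs. measurement by their variances.
        k = self.p / (self.p + self.r)
        self.x += k * (z - self.x)
        self.p *= 1.0 - k


kf = ScalarKalman(x=0.0, p=1.0, q=0.01, r=9.0)  # r = 9 m^2, i.e. ~3 m GPS std dev (assumed)
for dx, z in [(1.0, 1.5), (1.0, 1.7), (1.0, 3.4)]:
    kf.predict(dx)
    kf.update(z)
    print(f"x={kf.x:.2f} m, std={kf.p ** 0.5:.2f} m")
```

When the GPS variance `r` dominates, the gain is small, so a single noisy fix nudges the estimate rather than yanking it. A real GNSS/IMU/LiDAR fusion node would track a full pose state with matrix-valued covariances.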

About

Hey! I’m Akilan, a junior at the University of Texas at Dallas studying Computer Science. I love building where autonomy, audio, and ML meet, and then pushing those ideas to cloud scale so they actually ship.

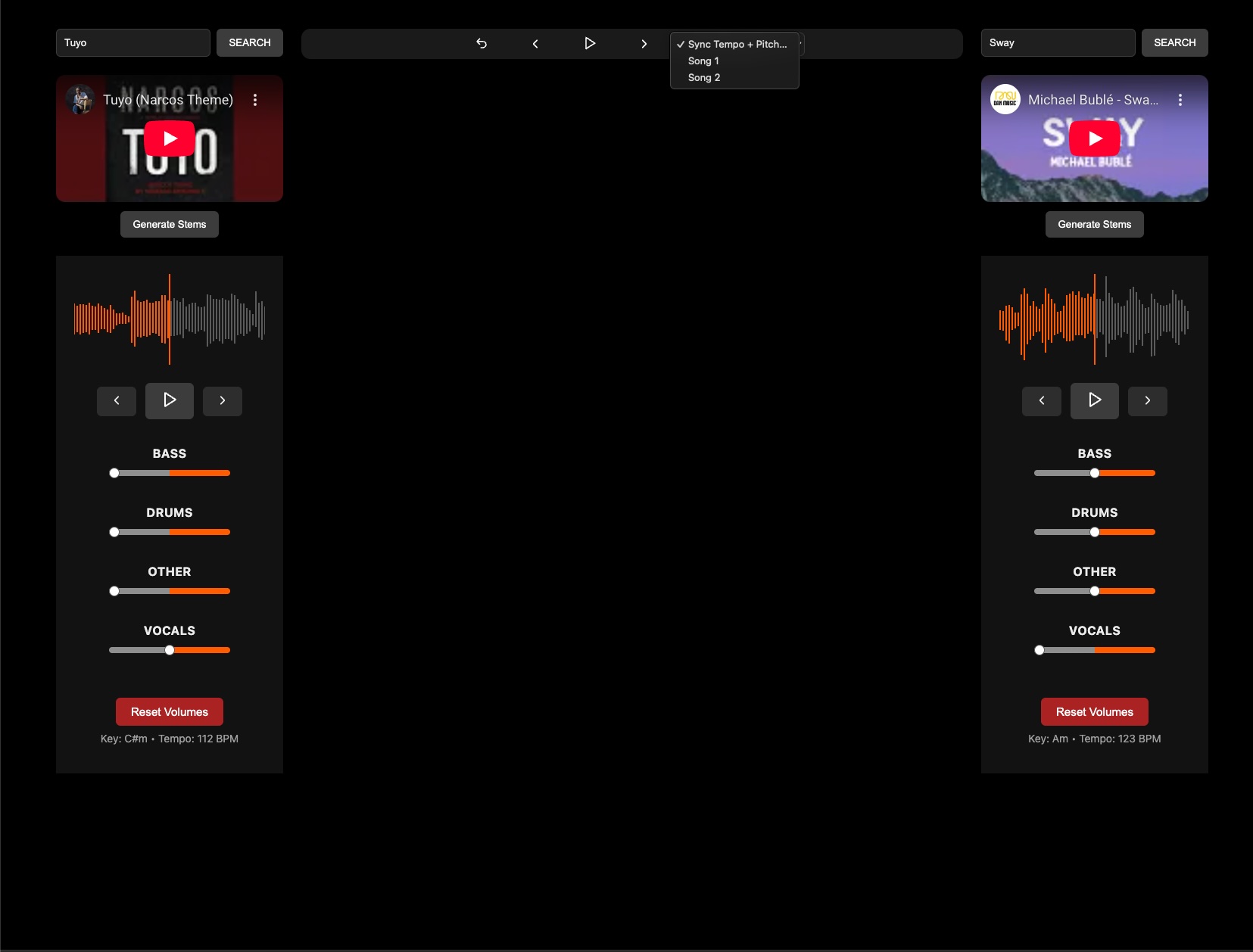

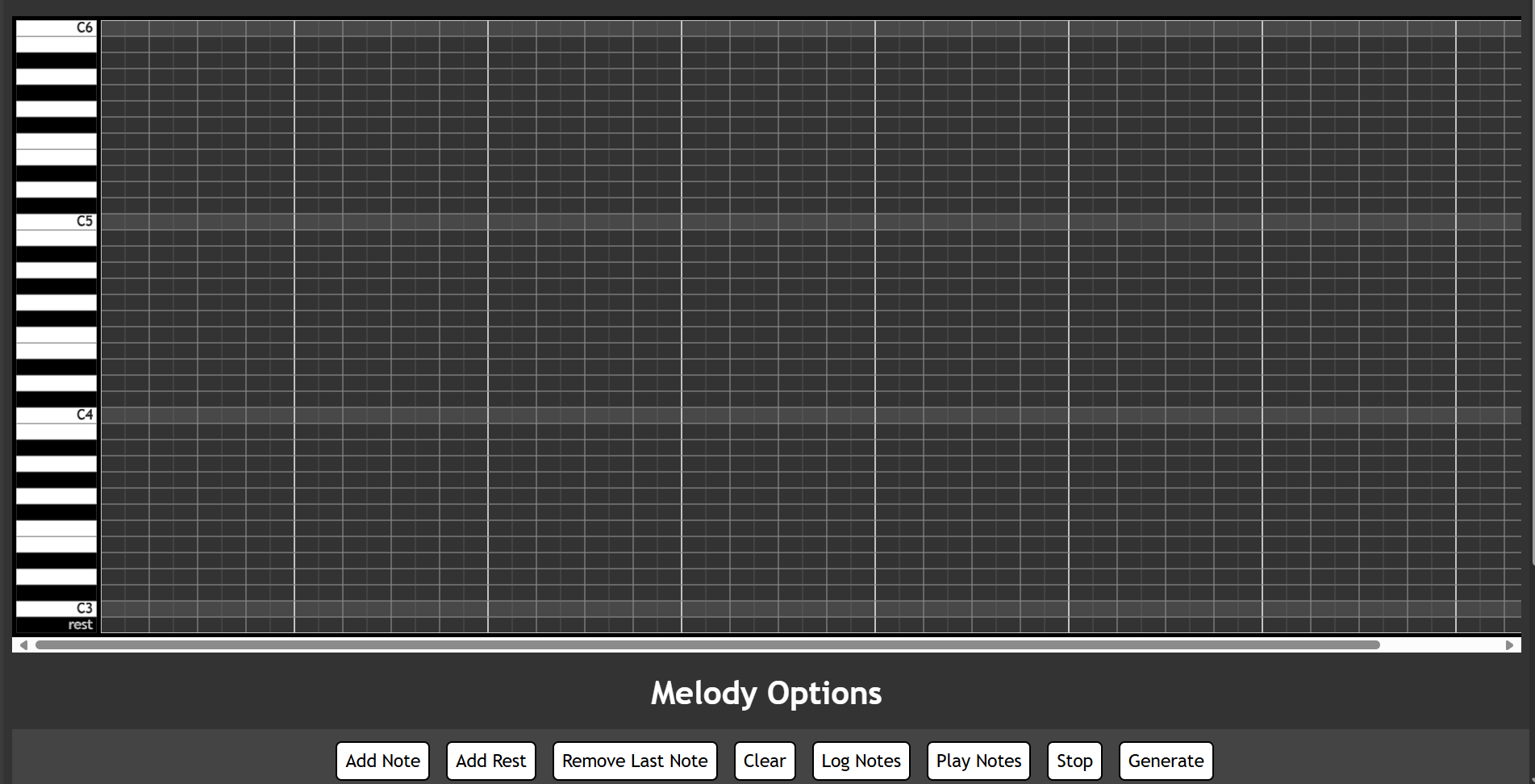

On the autonomy side I’ve built ROS2 sensor-fusion pieces (GNSS/IMU/LiDAR) and tooling that turns experiments into stable services. On the backend side I’ve consolidated microservices into cleaner pipelines (Flask + Leaflet + MQTT) and stood up FastAPI + Postgres stacks with solid developer experience (DX) and observability. In audio, I’ve shipped a web stem-separator (Demucs + Celery/Redis) and a melody-prediction RNN with response-time budgets in mind, plus a CLAP study exploring data-scarce training.

Research: I presented a poster on using synthetic data to train a CLAP model—and showed that, in low-data settings, synthetic examples can actually help the model learn useful audio-text alignments.
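CLAP learns audio-text alignment contrastively. Within a batch, each clip's embedding should be most similar to its own caption's embedding, and each caption's embedding most similar to its own clip's. The sketch below shows a CLIP-style symmetric contrastive loss in plain Python for illustration. It is not the training code behind the poster, and the function names and the 0.07 temperature are assumptions.

```python
import math


def cosine(a, b):
    # Cosine similarity between two embedding vectors.
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def cross_entropy(logits, target):
    # Numerically stable -log softmax(logits)[target].
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(v - m) for v in logits))
    return log_sum - logits[target]


def clip_style_loss(audio_emb, text_emb, temperature=0.07):
    # Row i of sims: similarity of audio clip i to every caption in the batch.
    n = len(audio_emb)
    sims = [[cosine(a, t) / temperature for t in text_emb] for a in audio_emb]
    # Audio -> text: each clip should pick out its own caption (the diagonal).
    audio_to_text = sum(cross_entropy(sims[i], i) for i in range(n)) / n
    # Text -> audio: each caption should pick out its own clip (column i).
    text_to_audio = sum(cross_entropy([sims[j][i] for j in range(n)], i) for i in range(n)) / n
    return (audio_to_text + text_to_audio) / 2


audio = [[1.0, 0.0], [0.0, 1.0]]
text = [[0.9, 0.1], [0.1, 0.9]]
print(clip_style_loss(audio, text) < clip_style_loss(audio, text[::-1]))  # True
```

Matched pairs yield a lower loss than shuffled ones. Minimizing this loss is what pulls paired audio and text embeddings together.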

Right now: sketching agentic workflows for customer support—triage, suggested replies, and routing—so teams can do more with fewer human agents while keeping quality and feedback loops tight.

How I work: prototype fast, productionize intentionally, ship behind flags, add traces/metrics from day one, and write docs that future-me actually thanks me for.

Outside of work it’s music, soccer, and TV shows (Ozark is peak). I also watch American football; nobody matches the Detroit Lions’ work ethic and team culture. Music-wise I bounce between ’80s classics and modern picks like Lola Young and Twenty One Pilots; I switch genres like I switch clothes.

When I need a reset, I speed-solve Rubik’s cubes and then get back to building.

Projects

A few focused builds

DJSplitter

Featured

Web-based stem separation + two-deck mixer. Demucs backend with Flask/Celery/Redis; React/Vite UI with Tone.js.

MelodyGen

Featured

RNN melody generator (TensorFlow) with a simple web demo; composes short sequences from prior notes.

TicTacToeAI

Featured

Unbeatable Tic-Tac-Toe using minimax with alpha-beta pruning; clean reference implementation.
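Minimax explores every continuation and assumes both sides play perfectly. Alpha-beta pruning skips branches the opponent would never allow. Here is a compact Python sketch of the technique. It is an illustrative reimplementation, not the TicTacToeAI source. The board encoding (a 9-element list of "X", "O", " ") and the function names are assumptions.

```python
import math

# All eight winning lines as board indices (row-major 3x3).
LINES = [(0, 1, 2), (3, 4, 5), (6, 7, 8),
         (0, 3, 6), (1, 4, 7), (2, 5, 8),
         (0, 4, 8), (2, 4, 6)]


def winner(board):
    for a, b, c in LINES:
        if board[a] != " " and board[a] == board[b] == board[c]:
            return board[a]
    return None


def minimax(board, player, alpha, beta):
    """Score from X's perspective: +1 X wins, -1 O wins, 0 draw."""
    w = winner(board)
    if w is not None:
        return 1 if w == "X" else -1
    if " " not in board:
        return 0
    maximizing = player == "X"
    best = -math.inf if maximizing else math.inf
    for i in range(9):
        if board[i] != " ":
            continue
        board[i] = player  # try the move in place...
        score = minimax(board, "O" if maximizing else "X", alpha, beta)
        board[i] = " "     # ...then undo it
        if maximizing:
            best = max(best, score)
            alpha = max(alpha, best)
        else:
            best = min(best, score)
            beta = min(beta, best)
        if alpha >= beta:
            break  # prune: the other side already has a better option elsewhere
    return best


def best_move(board, player):
    # Evaluate each legal move with a full window and pick the best for `player`.
    def score(i):
        board[i] = player
        s = minimax(board, "O" if player == "X" else "X", -math.inf, math.inf)
        board[i] = " "
        return s

    moves = [i for i in range(9) if board[i] == " "]
    return max(moves, key=score) if player == "X" else min(moves, key=score)


print(minimax([" "] * 9, "X", -math.inf, math.inf))  # 0
```

Searching from the empty board returns 0, the game-theoretic value of Tic-Tac-Toe (a draw under perfect play). That is why an agent that always takes the best-scoring move cannot be beaten.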

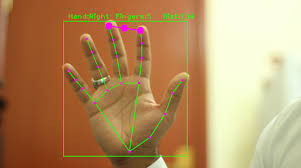

GestureController

Featured

Touch-free volume & brightness using webcam hand gestures. MediaPipe + OpenCV with a lightweight Flask bridge.
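One common way to drive a continuous control from hand gestures is to measure the distance between two fingertip landmarks and map it linearly onto a 0-100 range. The sketch below assumes that approach, since the project's actual gesture mapping isn't described here. The thresholds, the `pinch_to_level` and `Smoother` names, and the choice of MediaPipe Hands landmarks 4 (thumb tip) and 8 (index fingertip) are all illustrative.

```python
import math


def pinch_to_level(thumb, index, min_dist=0.03, max_dist=0.25):
    """Map thumb-to-index fingertip distance (normalized image coords) to a 0-100 level.

    min_dist/max_dist are hypothetical calibration thresholds.
    """
    d = math.dist(thumb, index)
    t = (d - min_dist) / (max_dist - min_dist)
    t = min(max(t, 0.0), 1.0)  # clamp: closed pinch -> 0, wide spread -> 1
    return round(t * 100)


class Smoother:
    """Exponential moving average to damp frame-to-frame landmark jitter."""

    def __init__(self, alpha=0.3):
        self.alpha = alpha
        self.value = None

    def __call__(self, x):
        if self.value is None:
            self.value = float(x)
        else:
            self.value = self.alpha * x + (1 - self.alpha) * self.value
        return self.value


smooth = Smoother()
for thumb, index in [((0.40, 0.50), (0.45, 0.50)), ((0.40, 0.50), (0.60, 0.50))]:
    print(round(smooth(pinch_to_level(thumb, index))))
```

In a capture loop, `thumb` and `index` would come from the `(x, y)` of those two landmarks. The smoothed level could then be passed to whatever OS volume or brightness call the Flask bridge makes.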

Skills
